We don’t fully understand copy protection. It’s mostly expensive, never flawless, and there are even rumors of scams and conspiracies surrounding it.

Copy protection is an intricate affair. It is at times misunderstood, misused or abused, and despite standardization efforts there is still confusion regarding schemes, providers, which browser supports what, etc.

Should you start worrying about your content being compromised, freely copied, or just inadvertently seen by unauthorized viewers, looking into a solution will probably soon send you into the arms of a DRM provider. Not that there’s anything wrong with that, but DRM is still pricey and not turnkey, so your team may have to put up with a steep learning curve.

Somewhat overlooked, the simpler flavours of content encryption (i.e. MPEG-CENC and HLS AES) still do a good job. The cryptography is just as strong, you can employ key rotation, and it costs nothing at all. You will, however, need to manage the keys yourself, including securing access to them. Also, unlike real DRM, you won’t be able to let your users watch content offline, but that’s not really an issue for live video.
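To make the HLS AES flavour a bit more concrete: the player learns where to fetch each key from the `#EXT-X-KEY` tag in the media playlist, and key rotation boils down to emitting a new tag every so often. The following is a minimal sketch, not a reference implementation, assuming a hypothetical key URI pattern `/keys/<stream>/<key_index>`, hypothetical segment names, and a rotation interval of a fixed number of segments. Because no `IV` attribute is emitted, players use each segment’s media sequence number as the IV, as RFC 8216 specifies.

```python
import math


def build_media_playlist(stream_id: str, first_seq: int, segment_count: int,
                         segment_duration: float = 6.0,
                         segments_per_key: int = 10) -> str:
    """Build an HLS media playlist whose AES-128 key rotates every
    `segments_per_key` segments. Key URIs and segment names are hypothetical."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows decimal EXTINF durations
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_duration)}",
        f"#EXT-X-MEDIA-SEQUENCE:{first_seq}",
    ]
    current_key = None
    for seq in range(first_seq, first_seq + segment_count):
        key_index = seq // segments_per_key  # hypothetical key numbering
        if key_index != current_key:
            # An EXT-X-KEY tag applies to every following segment until the
            # next EXT-X-KEY tag. With no IV attribute, the player uses the
            # media sequence number as the IV.
            lines.append(
                f'#EXT-X-KEY:METHOD=AES-128,URI="/keys/{stream_id}/{key_index}"'
            )
            current_key = key_index
        lines.append(f"#EXTINF:{segment_duration:.3f},")
        lines.append(f"{stream_id}_{seq}.ts")
    return "\n".join(lines) + "\n"


print(build_media_playlist("live1", 118, 4, segments_per_key=2))
```

With `segments_per_key=2`, segments 118 and 119 reference key 59 while 120 and 121 reference key 60, so each key only ever decrypts a short stretch of the stream.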

In case you’re wondering, no protection is bulletproof. Given enough determination and funds or skill, any scheme is ultimately vulnerable. Rather than aiming for perfection, safeguarding efforts focus on one or more of the following:

- Prevent eavesdropping on content while it travels from source to destination over the open internet

- Prevent clandestine viewing

- Prevent sharing of content by users who are properly authorized to see it

- Make it harder, or infeasible, for authorized viewers to reverse engineer the encryption and obtain a digital copy of the original video

- Accomplish as much of the above as you can while retaining as much of the user base as possible (some technologies may not be compatible with certain end-user devices, etc.)

- Stay within budget and remain competitive

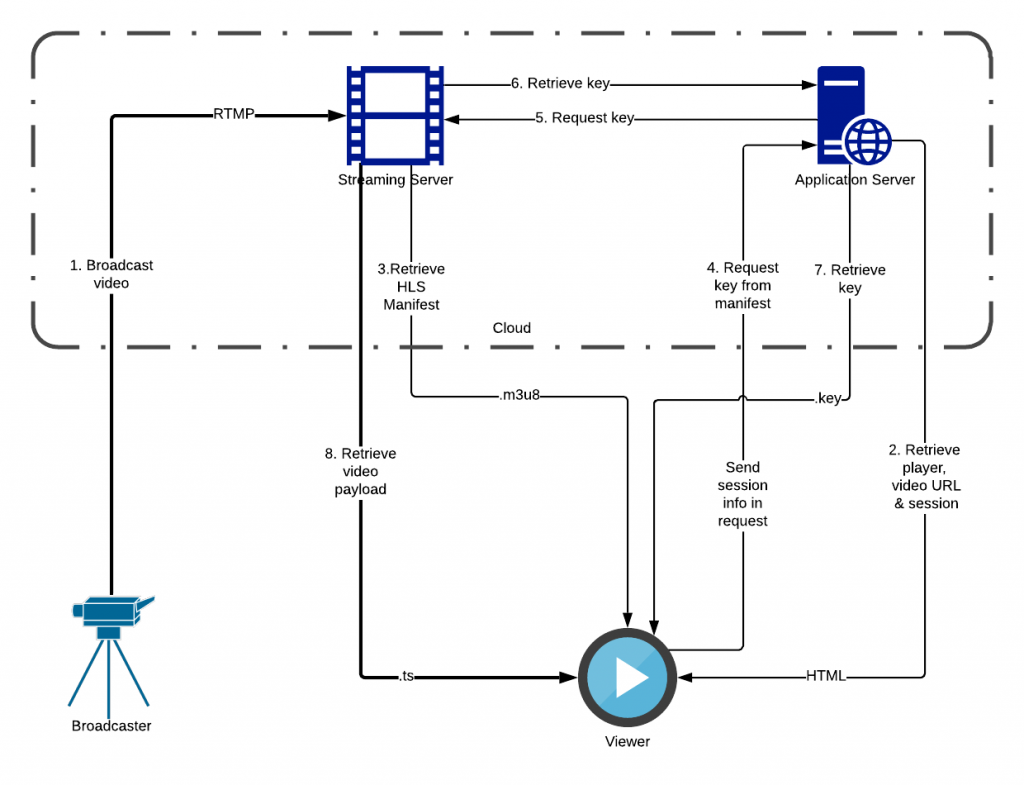

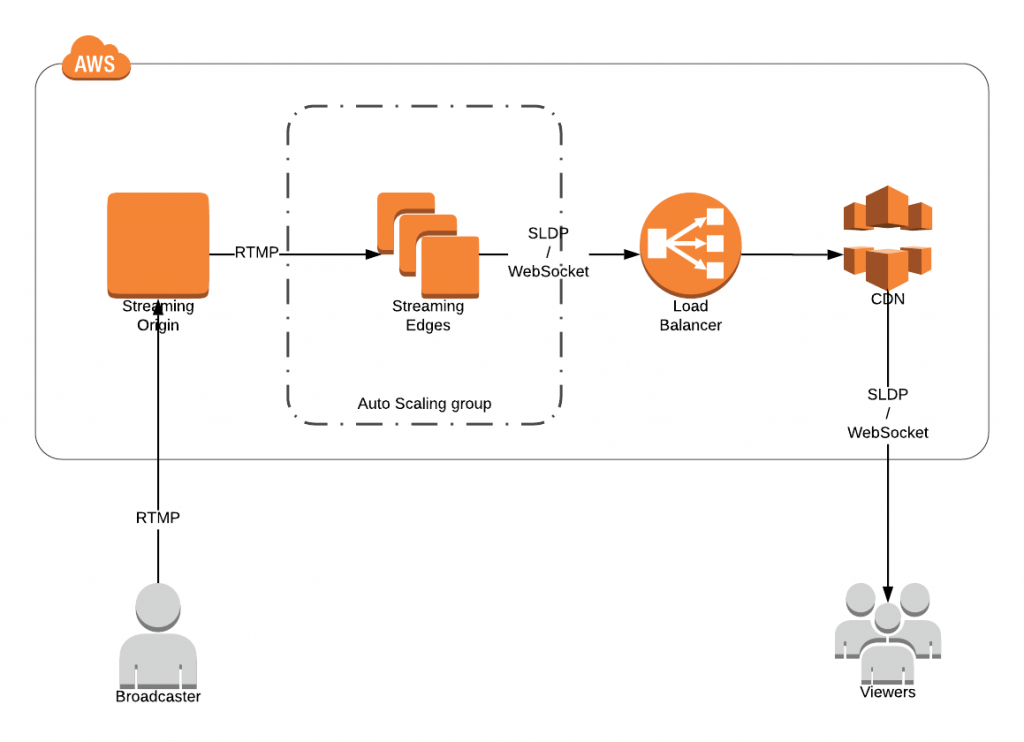

The cheap, easy, out-of-the-box solution I put together uses HLS AES. It builds on the simple live streaming platform, adding encryption and key management. Rather than employing a dedicated key server, it just uses the application’s own environment to handle the keys. This greatly simplifies the workflow, as requests for a key carry the session data (e.g. a cookie), which lets you instantly identify “who” is asking for the content.
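Here is a rough idea of what the key-serving side could look like. This is a minimal sketch, assuming the platform keeps sessions in a server-side store keyed by a session cookie (a plain dict and a hypothetical cookie name `sid` stand in for it here) and holds each stream’s 16-byte AES keys in memory. It only uses the standard library’s `http.cookies` to parse the `Cookie` header. Wiring it into your actual web framework is left out.

```python
from http.cookies import SimpleCookie

SESSION_COOKIE = "sid"  # hypothetical session cookie name


def serve_key(cookie_header, stream_id, key_index, sessions, keys):
    """Return (status, body) for a key request.

    sessions: dict mapping session id -> user id (stand-in for the app's store)
    keys: dict mapping (stream_id, key_index) -> 16-byte AES-128 key
    """
    cookie = SimpleCookie()
    if cookie_header:
        cookie.load(cookie_header)
    morsel = cookie.get(SESSION_COOKIE)
    user = sessions.get(morsel.value) if morsel else None
    if user is None:
        # No valid session, e.g. a hotlinked .m3u8 opened in VLC or on another site
        return 403, b""
    key = keys.get((stream_id, key_index))
    if key is None:
        return 404, b""
    # HLS AES-128 expects the raw 16 key bytes as the response body
    return 200, key


sessions = {"abc123": "alice"}
keys = {("live1", 59): bytes(16)}
print(serve_key("sid=abc123", "live1", 59, sessions, keys)[0])  # 200
print(serve_key(None, "live1", 59, sessions, keys)[0])          # 403
```

Because the browser attaches the cookie to the key request automatically, the player itself needs no changes; a request coming from anywhere else simply arrives without a valid session.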

At a glance, the architecture/flow looks like this:

The proof of concept merely ensures that only players accessing the video via your website/platform are able to consume the content. Any attempt to ‘hotlink’ the .m3u8 URL dug up from the page source will fail to play, because access to the decryption key is denied to anyone but legitimate viewers.

This is already a huge gain, as it will effectively block your content from being leeched not just through browsers and apps, but also via VLC and stalker links, potentially saving you a lot on the traffic bill. But there’s more: the same session check mechanism can be used to restrict access to individual pieces of content to authorized viewers only, e.g. for a live pay-per-view platform.
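For the pay-per-view case, the key request can be checked against a per-user entitlement rather than just a valid session. This is a minimal sketch, assuming a hypothetical entitlement table that maps (user, stream) to a purchase validity window in Unix time:

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Entitlement:
    """Hypothetical record: a user's access to one stream within a time window."""
    valid_from: float   # Unix timestamp
    valid_until: float  # Unix timestamp (exclusive)


def may_receive_key(user_id, stream_id, entitlements, now=None):
    """True if user_id holds a currently valid entitlement for stream_id."""
    if now is None:
        now = time.time()
    ent = entitlements.get((user_id, stream_id))
    return ent is not None and ent.valid_from <= now < ent.valid_until


entitlements = {("alice", "fight-night"): Entitlement(1_000.0, 2_000.0)}
print(may_receive_key("alice", "fight-night", entitlements, now=1_500.0))  # True
print(may_receive_key("bob", "fight-night", entitlements, now=1_500.0))    # False
```

Since keys rotate and the check runs on every key request, a viewer whose ticket expires mid-event stops receiving new keys at the next rotation, while the segments already decrypted with an earlier key are unaffected.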

Is it fast?

Compared to its unencrypted counterpart, there is extra work involved in encrypting/decrypting the content and distributing/retrieving the keys.

Yet since the encryption is symmetric and the key is fairly short (128 bits), this is less computationally intensive than HTTPS, which itself is a breeze for virtually all modern servers and devices.
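For a sense of how little HLS AES-128 adds on top of the payload: per RFC 8216, each segment is encrypted as a whole with AES-128 in CBC mode with PKCS7 padding, and when the playlist omits the `IV` attribute the IV is the segment’s media sequence number as a 16-byte big-endian value. The standard-library-only sketch below shows just the IV derivation and the padding. The block cipher itself would come from a crypto library (e.g. OpenSSL) and is deliberately left out.

```python
BLOCK = 16  # AES block size in bytes (128 bits)


def iv_from_sequence(media_sequence: int) -> bytes:
    """Default HLS IV: media sequence number, big-endian, left-padded to 16 bytes."""
    return media_sequence.to_bytes(BLOCK, "big")


def pkcs7_pad(data: bytes) -> bytes:
    """PKCS7: append n bytes of value n, where 1 <= n <= 16."""
    n = BLOCK - len(data) % BLOCK
    return data + bytes([n]) * n


def pkcs7_unpad(data: bytes) -> bytes:
    """Strip PKCS7 padding, rejecting malformed input."""
    n = data[-1]
    if not 1 <= n <= BLOCK or data[-n:] != bytes([n]) * n:
        raise ValueError("invalid PKCS7 padding")
    return data[:-n]


print("0x" + iv_from_sequence(118).hex())  # IV for media sequence 118
print(len(pkcs7_pad(bytes(188_000))))      # 188016
```

A 188,000-byte segment (1,000 TS packets) is already a multiple of 16, so it gains one full padding block. That is the worst case: the size overhead never exceeds 16 bytes per segment.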

Having to download a key may delay video start time by one round trip, but I’ve seen many players download the key and the payload (which is larger, and therefore slower) in parallel, so there is no delay in the big picture.
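To illustrate why fetching the key in parallel hides its round trip, here is a toy `asyncio` sketch with simulated network delays. The `fetch` function and the delay values are made up, and a real player uses its own HTTP stack. Start-up time comes out at roughly the longer of the two downloads rather than their sum.

```python
import asyncio
import time


async def fetch(name: str, delay: float) -> str:
    """Stand-in for an HTTP request that takes `delay` seconds."""
    await asyncio.sleep(delay)
    return name


async def start_playback(key_delay: float, segment_delay: float):
    """Fetch the key and the first segment concurrently; report elapsed time."""
    t0 = time.perf_counter()
    key, segment = await asyncio.gather(
        fetch("key", key_delay), fetch("segment", segment_delay)
    )
    # Decryption can start only here, once both downloads have completed.
    return key, segment, time.perf_counter() - t0


key, segment, elapsed = asyncio.run(start_playback(0.1, 0.3))
print(key, segment, f"{elapsed:.2f}s")  # roughly 0.3 s (the max), not 0.4 s (the sum)
```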

Overall, I’d say there is some added latency, but if done right it is negligible for all but a few corner-case implementations.

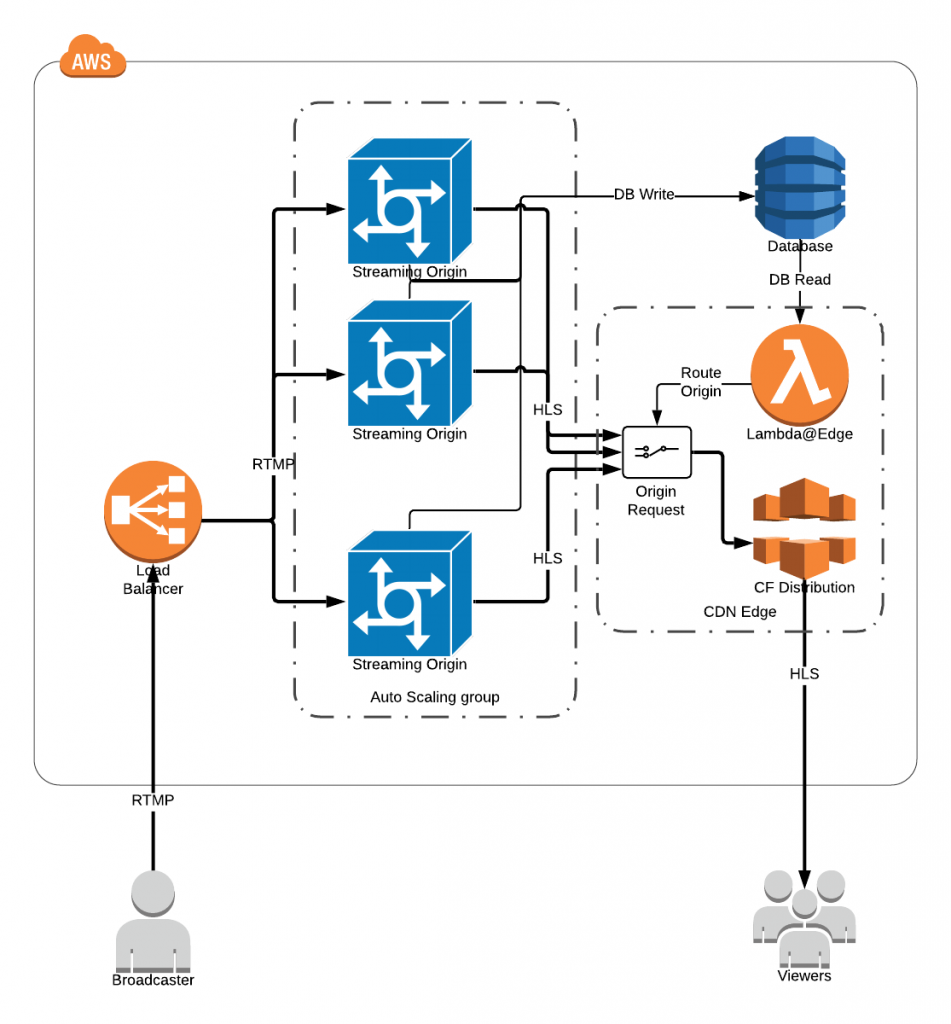

Does it scale?

Kind of 🙂

The HLS manifest and payload (.ts) are identical for all viewers and can therefore be cached and distributed via CDNs; no issues here.
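In practice, the split between cacheable and non-cacheable resources can be expressed with `Cache-Control` headers. This is a minimal sketch, assuming hypothetical URL conventions (`.ts` segments, `.m3u8` playlists, keys under `/keys/`) and example max-age values you would tune to your segment duration:

```python
def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value by resource type (hypothetical paths)."""
    if path.startswith("/keys/"):
        # Keys depend on who is asking: never let a CDN or shared cache store them.
        return "private, no-store"
    if path.endswith(".ts"):
        # Encrypted segments are identical for every viewer and never change.
        return "public, max-age=86400"
    if path.endswith(".m3u8"):
        # Live playlists change with every new segment, so keep them short-lived.
        return "public, max-age=2"
    return "no-cache"


for p in ("/live1/live1_118.ts", "/live1/index.m3u8", "/keys/live1/59"):
    print(p, "->", cache_control_for(p))
```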

Keys, on the other hand, get rotated, and serving one to any user involves checking their credentials. That is your potential bottleneck under high load, and special care may be needed to handle it. A typical CMS, however, already performs the same credential check over and over for all sorts of other queries, so the added key check is unlikely to make a significant impact in the bigger picture.
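One way to take some pressure off the credential check is to cache each session’s authorization decision for a short time. The same viewer keeps coming back for keys as they rotate, and their status rarely changes between requests. This is a minimal sketch, assuming a hypothetical `check` callable that performs the expensive lookup (database, CMS, etc.) and an injectable clock for testing:

```python
import time
from typing import Callable, Dict, Tuple


class AuthCache:
    """Cache (session, stream) -> allowed decisions for `ttl` seconds."""

    def __init__(self, check: Callable[[str, str], bool], ttl: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self._check = check  # hypothetical expensive credential lookup
        self._ttl = ttl
        self._clock = clock
        self._entries: Dict[Tuple[str, str], Tuple[bool, float]] = {}

    def allowed(self, session_id: str, stream_id: str) -> bool:
        now = self._clock()
        hit = self._entries.get((session_id, stream_id))
        if hit is not None and hit[1] > now:
            return hit[0]  # still fresh: skip the expensive lookup
        result = self._check(session_id, stream_id)
        self._entries[(session_id, stream_id)] = (result, now + self._ttl)
        return result
```

The trade-off is that revoking access takes effect only after up to `ttl` seconds, and a production version would also need to evict stale entries so the dictionary does not grow without bound.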

All in all, your platform should be just as scalable as it was before you added copy protection to it.

Is it stable/reliable?

A few variations of the solution are running in production, the oldest for more than two years. While I’ve seen no issues with the architecture itself, there have been some quirky implementations that required extensive troubleshooting. As putting this together is a multidisciplinary effort, and the setup is somewhat atypical, there is room for misunderstandings. If it is handled improperly, authorized viewers may be wrongly denied access or, on the flip side, unauthorized viewers may watch the content illicitly, or it may even be hotlinked and publicly viewed via other sites or apps. Keep in mind that the whole point of copy protection is to deny access to all but the intended viewers, and thoroughly test all use cases. Also, as there’s no way to tell the player why it was denied access, do not rely on the key-serving mechanism for anything other than preventing deliberately illicit access to the content.

Is it effective?

For what it’s worth, there’s no viable way to safeguard against screen recording. Call it the “analog hole”, although it has since turned digital: if there is a will to obtain an illegal copy of something, there is a way, even if that means the copy will be of lower quality than the original.

That said, the solution admirably manages to:

- Make your content impossible to view from another site or app

- Make your content impossible to view via shared .m3u8 links

- Make your content impossible to view on your site/app unless authorized

- Cost nothing

- Be compatible with all modern browsers and platforms

Can I use it to stream studio content or mainstream sports?

Probably not. The licensors of such content may require you to use full-blown DRM, and for good reason: they can’t trust a custom implementation and don’t have the means to audit it, so they prefer to rely on tried-and-true schemes and providers. Each has its own requirements, so be sure to ask.

You will still be able to use it for most non-mainstream content though, like indie films/music, college sports, and lots of other stuff.