Millions, with an M

You’ve seen live viewer counters on the big players’ platforms. But when trying to put together your own, you may have hit a price, maintenance, or scalability wall.

The following solution is no magic fix for these and surely nothing special, yet it may help you understand the pitfalls and spot the warning signs ahead of time.

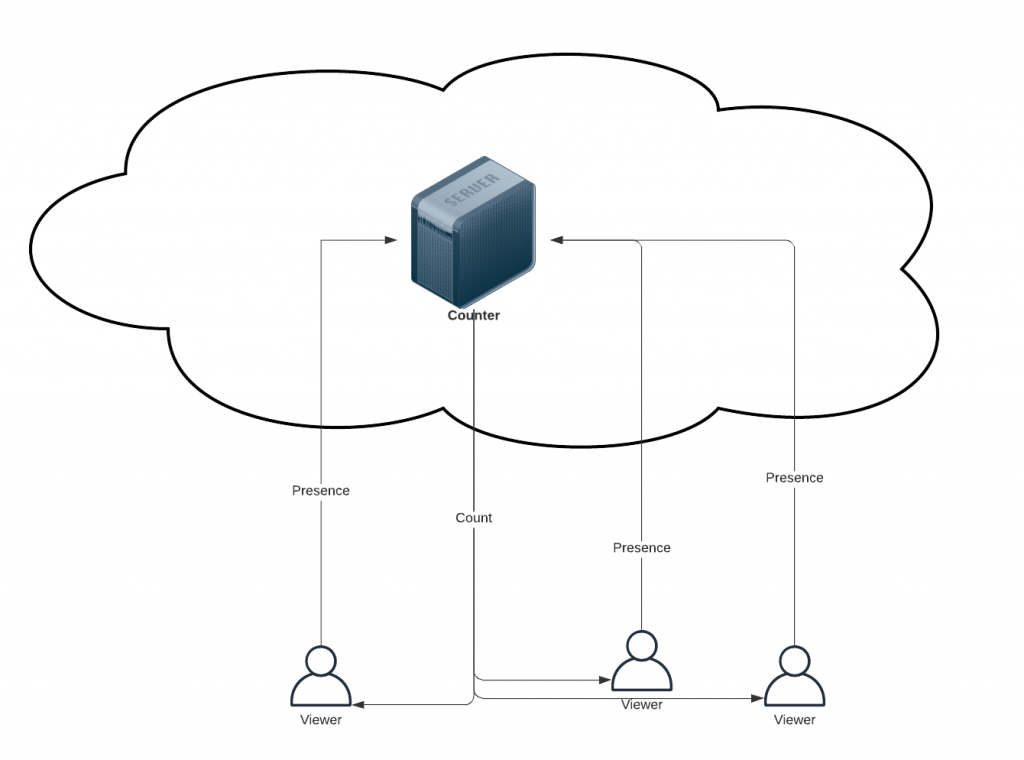

How it works

As simple as you’d imagine. Each viewer announces its presence to a central authority; let’s call that the counter. As soon as a new presence is announced, all the viewers are notified that the audience count has increased. Likewise, as soon as any viewer disengages, the others are notified that the count has decreased.
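The flow above can be sketched in a few lines. This is a minimal in-memory model, not the published implementation: the `Counter` class, its `join`/`leave` methods and the `notify` callbacks are names assumed for illustration, and the callbacks stand in for whatever network connection each viewer really has.

```python
from typing import Callable, Dict


class Counter:
    """Hypothetical central counter: tracks viewers and notifies them all."""

    def __init__(self) -> None:
        # viewer id -> callback used to push the current count to that viewer
        self._viewers: Dict[str, Callable[[int], None]] = {}

    def join(self, viewer_id: str, notify: Callable[[int], None]) -> None:
        """A viewer announces its presence; everyone gets the new count."""
        self._viewers[viewer_id] = notify
        self._broadcast()

    def leave(self, viewer_id: str) -> None:
        """A viewer disengages; the remaining viewers get the new count."""
        if self._viewers.pop(viewer_id, None) is not None:
            self._broadcast()

    def _broadcast(self) -> None:
        count = len(self._viewers)
        # Copy the callbacks so a callback may safely join or leave viewers.
        for notify in list(self._viewers.values()):
            notify(count)


if __name__ == "__main__":
    counter = Counter()
    counter.join("alice", lambda n: print(f"alice sees {n}"))
    counter.join("bob", lambda n: print(f"bob sees {n}"))
    counter.leave("bob")
```

Note that every join or leave touches every connected viewer, which is what makes the scaling question further down interesting.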

Persistent connection

To facilitate instant updates, a continuously open connection is required between each viewer and the centralized counter. Having the viewer just ask for the number every once in a while (i.e. polling) is still an option but won’t be nearly as smooth or fast.

Sockets

Such connectivity can generally be accomplished by means of sockets. Long story short, a socket is a kind of nearly-instant bi-directional data channel between 2 network-connected devices.

WebSockets

Most apps can freely create and use regular sockets; code running in the restrictive context of a web browser cannot. Special abstractions had to be devised to bring socket-like functionality to the browser, of which the WebSocket has come out on top and is finally widely supported.

The server

The so-called counter is merely a piece of software. It has to reside on a publicly accessible, always-on computer or device that old-timers like to call a server. While it does a lot of things, a server’s main job is to ‘serve’ the common needs of various other (not necessarily so public or available) devices, generally referred to as ‘clients’.

The ready to use solution

Is here for grabs. Variations have been implemented on multiple platforms and it’s stood the test of time.

How cheap is it?

You’ll just be paying for the server(s), unless you can run the counter on one of your existing computers (remember, it needs to be publicly reachable) or take advantage of some cloud’s free tier, in which case it’s free (as in beer).

In production, consider budgeting $1 per 1k simultaneous viewers per month, prorated.
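As a back-of-the-envelope sketch of that budget: the function below reads ‘prorated’ as scaling linearly with both the number of simultaneous viewers and the fraction of the month the counter is actually running. That reading, the 730-hour month and the function name are assumptions made for illustration, not a pricing formula from any provider.

```python
HOURS_PER_MONTH = 730.0  # assumed average month length, in hours


def estimated_cost_usd(
    simultaneous_viewers: int,
    hours_running: float,
    usd_per_1k_per_month: float = 1.0,  # the rule of thumb quoted above
) -> float:
    """Rough budget: $1 per 1k simultaneous viewers per month, prorated by time."""
    thousands_of_viewers = simultaneous_viewers / 1000.0
    fraction_of_month = hours_running / HOURS_PER_MONTH
    return thousands_of_viewers * usd_per_1k_per_month * fraction_of_month


if __name__ == "__main__":
    # A 3-hour event with 20k simultaneous viewers
    print(f"${estimated_cost_usd(20_000, 3):.2f}")
```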

Does it scale?

Not without headaches.

The boxed solution is well optimized and proven to accommodate some 10k viewers when running on the smallest available cloud instance (with just 0.5 GB of RAM!)

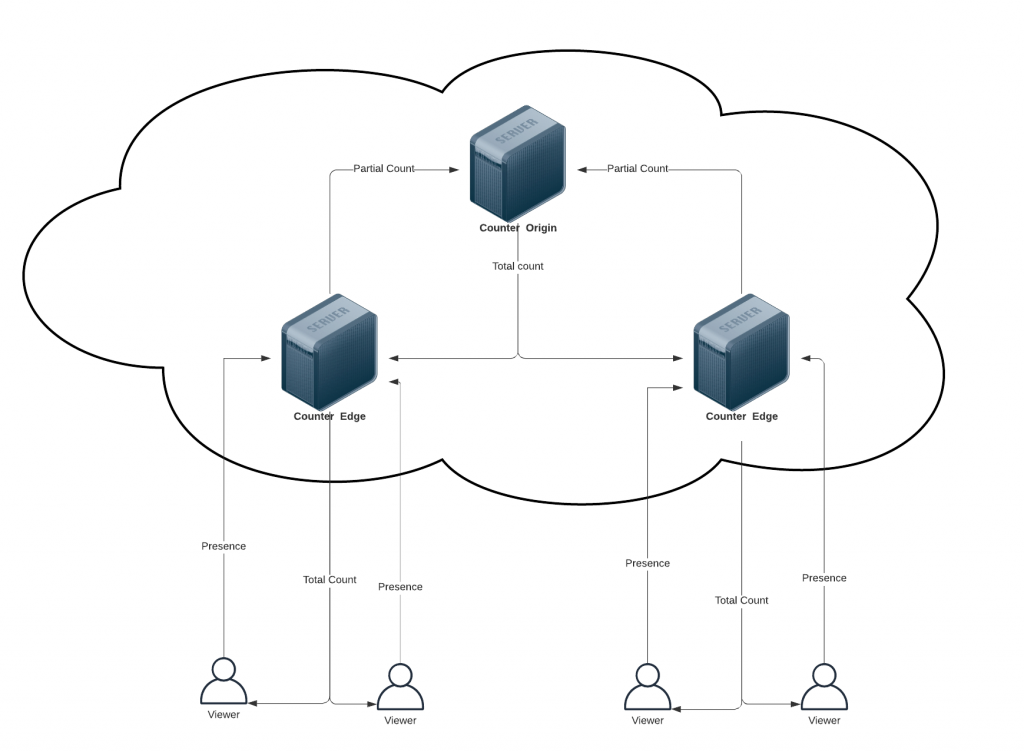

It can stretch to maybe 4-5 times that many on a single computer, but a truly scalable setup takes an autoscaled cluster of ‘servers’. Not too complex really; it has been done repeatedly, and I hope to find the time to dust one off and make it public soon.

Is it stable/reliable?

Up to a point…

- You’ll see it hogging the host’s CPU way before it starts being laggy to your viewers. Run it on a more powerful computer next time you expect a similar or larger audience

- Memory use hasn’t been a real concern in any of the implementations

- If you notice a fairly constant ceiling that your counter never goes above, your setup (the software, the OS, or the NIC) may be running out of sockets it can keep open simultaneously. There are many ways to mitigate that; details vary with the specifics of the environment

- Before WebSockets were ubiquitous, long-polling (and at times its creepier cousin, short-polling) was the norm for setting up persistence in the browser; these put a more severe burden on the server and can safely be avoided, at long last; don’t give in to the likes of socket.io unless you really know what you’re doing
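On the socket ceiling mentioned in the list above: on Unix-like hosts, one common culprit is the per-process limit on open file descriptors, since every open socket uses one. The sketch below only reads that limit through Python’s standard `resource` module (Unix-only). It is one possible check among many, and the helper names are made up for illustration.

```python
import resource  # standard library, available on Unix-like systems only
from typing import Tuple


def open_file_limits() -> Tuple[int, int]:
    """Return the (soft, hard) limits on open file descriptors for this process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def describe(limit: int) -> str:
    """Render a limit value, treating RLIM_INFINITY as 'unlimited'."""
    return "unlimited" if limit == resource.RLIM_INFINITY else str(limit)


if __name__ == "__main__":
    soft, hard = open_file_limits()
    # Each connected viewer needs one descriptor, so the soft limit caps the
    # number of simultaneous connections a single process can hold.
    print(f"soft limit: {describe(soft)}, hard limit: {describe(hard)}")
```

If the soft limit sits close to the number your counter plateaus at, that is a strong hint; if it doesn’t, the ceiling is coming from somewhere else.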

Is it secure?

In the example it’s not. Anyone who wanted to inject fake viewers into your pool could easily do so. They could also go the extra (DoS) mile and try to bring the ‘counter’ and the server hosting it to their knees by faking a jolly bunch of extra viewers.

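Not to say it can’t be made safe. Origin checking and SSL/TLS are the first things to consider (WebSocket handshakes are not covered by CORS, so the server has to validate the Origin header itself). Then add some simple way to limit the rate and payload size.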
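One simple way to limit rate and payload size is a per-connection token bucket plus a hard cap on message length. The sketch below is just that, a sketch: the class, the `accept_message` helper and the numbers are assumptions for illustration, not part of the published counter.

```python
import time
from typing import Callable

MAX_PAYLOAD_BYTES = 256  # assumed cap; a presence message should be tiny


class TokenBucket:
    """Allows `rate_per_sec` messages per second on average, with bursts up to `burst`."""

    def __init__(
        self,
        rate_per_sec: float,
        burst: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.rate = rate_per_sec
        self.capacity = float(burst)
        self.tokens = float(burst)  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def accept_message(bucket: TokenBucket, payload: bytes) -> bool:
    """Reject oversized payloads outright, then apply the rate limit."""
    if len(payload) > MAX_PAYLOAD_BYTES:
        return False
    return bucket.allow()
```

You would keep one bucket per connection (or per IP address) and drop, or disconnect, whoever keeps getting rejected.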

Next up, any extra validation, authentication, tokenization etc. will take a slight toll on the server resources, multiplied by one of the numbers above. So be wary and benchmark each addition.

Is it fast?

Yesss! As fast as you’d expect an update to propagate over the internet these days, at half the speed of light if lucky.

Sounds like a simple task, why is it so hard to scale?

Consider the following scenario: 100 average viewers, each coming in or out every 10 minutes. That’s 10 updates per minute, to propagate to all of the 100, for a total of 1000 updates per minute.

Now for 1000 average viewers, each also coming in or out every 10 minutes. That’s 100 updates per minute, to propagate to all of the 1000, for a total of 100k updates per minute.

Take that to 10k average viewers and it’ll be 10M (!) updates per minute. And that’s just averages; real life will show you that the audience tends to flock in at the beginning and at key moments of an event.
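The arithmetic in these scenarios boils down to (viewers / 10) events per minute, each fanned out to every viewer, so the total grows with the square of the audience. A small sketch to play with the numbers (the function name and default are mine):

```python
def updates_per_minute(viewers: int, minutes_between_events: float = 10.0) -> float:
    """Messages the counter has to send per minute, on average.

    Each viewer comes in or out once every `minutes_between_events`, and
    every such event is propagated to all `viewers`.
    """
    events_per_minute = viewers / minutes_between_events
    return events_per_minute * viewers


if __name__ == "__main__":
    for audience in (100, 1_000, 10_000):
        print(f"{audience:>6} viewers -> {updates_per_minute(audience):>12,.0f} updates/min")
```

Ten times the audience means a hundred times the traffic.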

OK, there are tricks to tame that treacherous quadratic growth, and you know one of them already: display 1.7k viewers instead of 1745 viewers. That’s roughly a hundred-fold reduction in the number of updates, out of the box! And there’s more to be done, of course.
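Here is roughly what the rounding trick could look like: format the count as a short label and only push an update when the label itself changes. The names are hypothetical, and the choice to truncate rather than round is an assumption of this sketch.

```python
from typing import Optional


def display_label(count: int) -> str:
    """1745 -> '1.7k'; counts below 1000 are shown as they are."""
    if count < 1000:
        return str(count)
    tenths_of_k = count // 100  # 1745 -> 17 (truncated, not rounded)
    return f"{tenths_of_k // 10}.{tenths_of_k % 10}k"


class LabelGate:
    """Lets an update through only when the displayed label has changed."""

    def __init__(self) -> None:
        self._last: Optional[str] = None

    def update(self, count: int) -> Optional[str]:
        label = display_label(count)
        if label == self._last:
            return None  # nothing visible changed, nothing to broadcast
        self._last = label
        return label


if __name__ == "__main__":
    gate = LabelGate()
    sent = [label for c in range(1700, 1900) if (label := gate.update(c)) is not None]
    print(sent)  # 200 count changes collapse into two broadcasts
```

Below 1000 viewers every change still goes out, which is fine, since that is also where the traffic is small.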
