…obviously streaming and storage costs affect the budget greatly

It has been easy for a while, just pointing the CDN at your single HLS muxer (Wowza, Nimble, Flussonic, Ant, Nginx etc). More often than not it works, and it stretches to serve as many viewers as any marketing can bring. But not as many broadcasters.

You know it’s a matter of time before capacity or failover needs force you to come up with a multiple-origin setup. And none of the top vendors offers a straightforward solution for it. No surprise, they’d rather lure you into using their cloud 🙂

Answering “what stream is where” is just a hash table away, yet in a global and scalable context resolving it extremely fast, over and over, is far from trivial.

Most solutions I’ve seen either use specialized edges (like this) or employ distinct CDN branches (i.e. distributions) for every origin. While the former is either expensive or comes with extra development (read: extra bugs), the latter is infeasible or outright impossible for a fluctuating number of origins, for instance if you want to autoscale them.

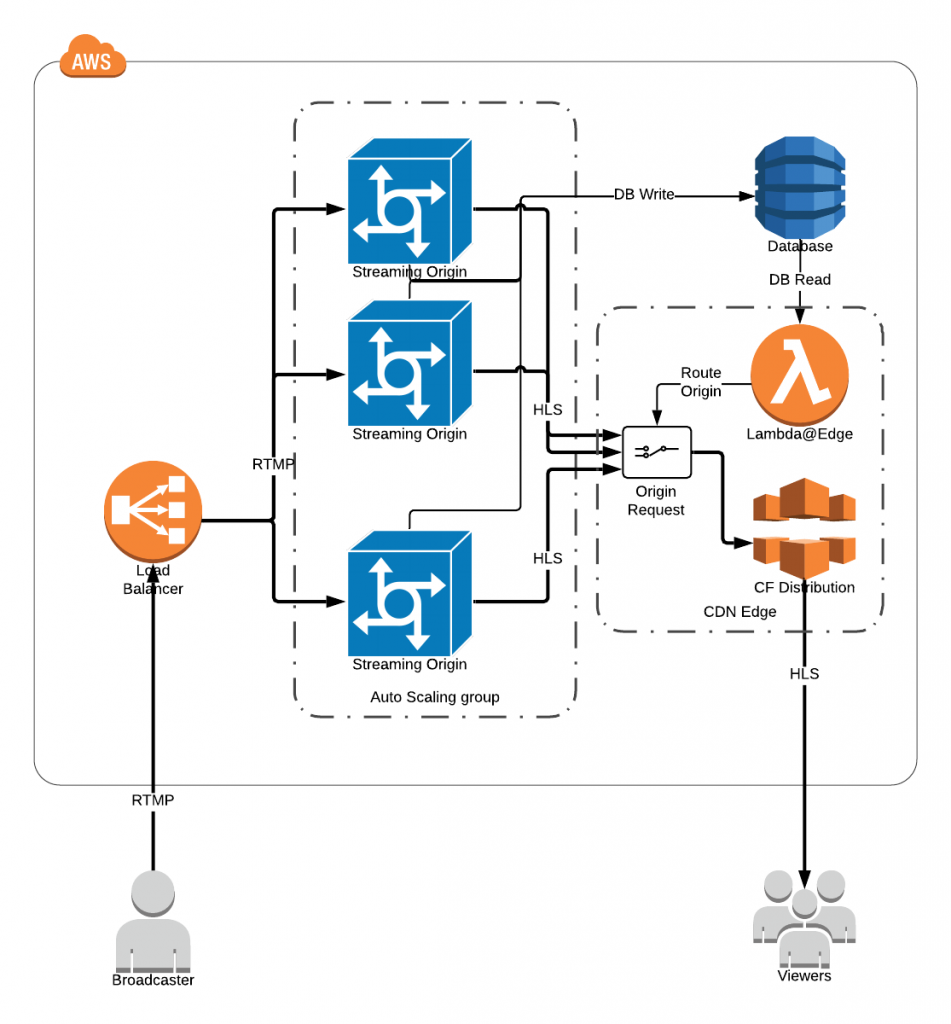

The proposed solution makes use of Lambda@Edge on top of CloudFront. The origins are customized to simply leave a note of every stream that is being broadcast to them in a database (i.e. DynamoDB), while a Lambda function at the edge level retrieves that value and, based on the result, routes HLS calls to the proper origin.
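To make the routing step concrete, here is a minimal Python sketch of an origin-request function. It is not the code deployed on the platform, just an illustration under assumptions: the table name `stream-origins`, its `stream` key and `origin` attribute, the region, and the Wowza-style URL layout `/<application>/<stream>/...` are all hypothetical, and the distribution is assumed to use a custom (non-S3) origin.

```python
import re

# Hypothetical names, chosen for illustration only.
TABLE_NAME = "stream-origins"
TABLE_REGION = "us-east-1"

# Assumed URL layout: /<application>/<stream>/<playlist or segment>
STREAM_PATH = re.compile(r"^/[^/]+/([^/]+)/")


def stream_name_from_uri(uri):
    """Extract the stream name from an HLS request path, or None."""
    match = STREAM_PATH.match(uri)
    return match.group(1) if match else None


def lookup_origin(stream):
    """Ask DynamoDB which origin hostname currently hosts the stream."""
    import boto3  # imported lazily so the routing logic can be tried offline

    table = boto3.resource("dynamodb", region_name=TABLE_REGION).Table(TABLE_NAME)
    item = table.get_item(Key={"stream": stream}).get("Item")
    return item["origin"] if item else None


def route(request, resolve):
    """Point a CloudFront origin request at the origin returned by resolve()."""
    stream = stream_name_from_uri(request["uri"])
    origin_host = resolve(stream) if stream else None
    custom = request.get("origin", {}).get("custom")
    if origin_host and custom is not None:
        custom["domainName"] = origin_host
        # The Host header has to follow the origin we are switching to.
        request["headers"]["host"] = [{"key": "Host", "value": origin_host}]
    # Unknown streams fall through to the distribution's default origin.
    return request


def handler(event, context):
    """Lambda@Edge entry point for the origin-request trigger."""
    request = event["Records"][0]["cf"]["request"]
    return route(request, lookup_origin)
```

Keeping `route` separate from the DynamoDB call means the rewrite logic can be exercised locally with a stub resolver before anything is deployed to the edge.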

It has been deployed on a platform that hosts an ever-fluctuating number of broadcasters, with dynamics so arbitrary they’re just impossible to predict. This required an autoscale setup for the origin count, and we also wanted to separate the origin-edge logic from the main application. Architecture looks like this:

While the client chose to remain unnamed, they consented to publishing the solution. Here goes.

Is it fast?

Blazing! It is substantially accelerated by CDN caching (calls to the same objects will be routed to the same origin without further resolution), explicit caching built into the Lambda function, and (possibly) DynamoDB internal caching (we observed that subsequent requests for the same value are faster, even from different locations).

While some HLS calls will be inherently delayed (mostly the first few), edge resolution hardly slows it down at all in the big picture.

Is it expensive?

I’ll say no, but I can’t give you the figures. As Lambda acts at the ‘origin-request’ level, only a fraction of the requests (the ones CloudFront cannot serve from its own cache) will invoke it. And the explicit caching (see lambda code) makes it so only a few of those invocations actually request data from the database. All in all, expect to be paying pocket change for Lambda and DynamoDB for any audience, literally.

Is it worth it compared to other solutions?

Once again, it depends on what you’re after. This particular project started off with its own set of confines, AWS/CloudFront and Wowza included.

If you’re using something other than Wowza, setting up a compatible notifier will be pretty easy.
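For a sense of how little such a notifier has to do, here is a sketch in Python, assuming your media server can run a script or callback when a stream is published and unpublished. The function names, the table name `stream-origins`, and the `stream`/`origin`/`updated_at` attributes are hypothetical; this is not the Wowza module used in the project.

```python
import time


def dynamodb_table(name="stream-origins", region="us-east-1"):
    """Open the (hypothetical) mapping table; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the functions below can be tried with a stub

    return boto3.resource("dynamodb", region_name=region).Table(name)


def on_publish(table, stream, origin_host):
    """Record that this origin is now receiving the stream."""
    table.put_item(
        Item={
            "stream": stream,
            "origin": origin_host,
            "updated_at": int(time.time()),
        }
    )


def on_unpublish(table, stream):
    """Drop the note once the broadcast ends."""
    table.delete_item(Key={"stream": stream})
```

A publish hook would then call something like `on_publish(dynamodb_table(), "abc", "origin-2.example.com")`, and the matching unpublish hook would call `on_unpublish(dynamodb_table(), "abc")`.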

For a CDN other than CloudFront, I don’t know if something similar to Lambda@Edge exists, or if there’s a way to make it equally quick.

Any (fast) database setup would work in place of DynamoDB, but keep in mind that it needs to be globally available for the array of edges to access, so a NoSQL, AWS-hosted database makes the most sense within the AWS network. An in-memory store (Redis) was considered, but it came with only marginal increases in speed and substantial extra cost and maintenance requirements.