2 second delay is fine…

We’ve witnessed HLS and MPEG-DASH taking over from RTMP in just a few years, yet both are inherently delayed from real time due to their segmented nature. We are talking default end-to-end lags of over 30 seconds. While a sub-minute delay is harmless for some live streaming setups, others are compelled to reduce latency to the very minimum. Think live auctions, gambling, or trading platforms.

To be clear, HTTP streaming and its big delays are no mistake. It leverages the ubiquity of HTTP, gives your player time to adapt to network fluctuations, doesn’t rush the muxer to output slices or the edges to cache them. It’s friendly to ABR. And it’s hugely beneficial to the CDN industry 🙂

While there are no protocol limitations when delivering to mobile apps, and you can easily stream over RTMP or RTSP, the browser is very restrictive and not at all straightforward to live stream to or from.

For quite a while, online platform implementers held on to RTMP, but as support for it eventually went away, the community had to adapt. Many of us tried pushing HLS and DASH to their limits (shorter segments and shorter playlists), but that soon proved to be far from ideal, as playback smoothness would suffer on all but the best connections.
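The effect of segment length on delay can be approximated with simple arithmetic. The sketch below is a rough model, not a measurement: it assumes the player starts playback a fixed number of segments behind the live edge (three is a common default, but it is an assumption here), and `segmented_latency` with its parameters is an illustrative name rather than part of any player API.

```python
def segmented_latency(segment_seconds: float,
                      buffered_segments: int = 3,
                      overhead_seconds: float = 0.0) -> float:
    """Rough estimate of how far behind live a segment-based player sits.

    segment_seconds   -- duration of one media segment
    buffered_segments -- segments the player holds before starting (assumed: 3)
    overhead_seconds  -- encode, package, and CDN delay lumped together (assumed)
    """
    return segment_seconds * buffered_segments + overhead_seconds


if __name__ == "__main__":
    # Shrinking the segments shrinks the delay, but leaves the player
    # with less buffered media to ride out network fluctuations.
    for seg in (10, 6, 2, 1):
        print(f"{seg:>2}s segments -> ~{segmented_latency(seg):.0f}s behind live")
```

With 10-second segments the model lands at the 30-second mark mentioned earlier; with 1 or 2-second segments the delay drops to a few seconds, at the price of a buffer only a few seconds deep.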

You’ll run into more and more companies and individuals willing and able to put together a custom low-latency solution nowadays. Moreover, the rise of HQ Trivia seems to have stimulated many to bring their approach to the masses and offer plug-and-play solutions. You may hear stories of ground-breaking technology, but really, they all fall into one of 3 categories:

- WebRTC based – WebRTC is not particularly new to the game, yet it’s still not ubiquitous, and it may be a while before it is; providers like Twilio or Red5Pro offer easy-to-integrate SaaS or hosted solutions on top of it, and CDNs like LimeLight are building it into their networks. If done right, you can expect sub-second latencies out of WebRTC, even across long distances and poor network connections.

- WebSocket based – every modern browser supports WebSockets; while not trivial, a high-level protocol can be implemented on top of them to stream video from server to client; at least in theory, capabilities similar to RTMP can be achieved. As WebSockets run over TCP (whereas WebRTC can use UDP) and the added protocols introduce overhead, expect a latency of 2-3 seconds out of any such approach; providers like Wowza, Nanocosmos and Nimble offer solutions based on it, while CDNs like CloudFront and CloudFlare now support WebSockets.

- Chunked-transfer based – chunked transfer is built into HTTP 1.1, and makes it possible for “chunks” of data to be written to, and read from, the network before the whole payload is available. Given a compatible encoder, infrastructure, and decoder, this can be used to output and play back “not-yet-complete” video segments, and significantly reduce the latency of segment-based protocols. The technique is being employed with promising results for HLS, DASH, and, more recently, CMAF. Companies like Periscope or TheoPlayer offer proprietary solutions based on it, and a few open approaches can be found online. Chunked transfer is supported by some CDNs. Expect a latency of under 5 seconds, though it will vary greatly with the specifics of the implementation.
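To make the chunked-transfer idea more concrete, here is a minimal sketch of the HTTP/1.1 chunked framing itself: each chunk is a hexadecimal length, CRLF, the payload, CRLF, and a zero-length chunk ends the body. It is an illustration only, not how any of the vendors above implement it; `encode_chunked`, `decode_chunked`, and the `moof+mdat` placeholder payloads are made-up names, and an in-memory buffer stands in for the network.

```python
import io
from typing import BinaryIO, Iterable, Iterator


def encode_chunked(parts: Iterable[bytes]) -> Iterator[bytes]:
    """Frame each part as an HTTP/1.1 chunk as soon as it is available."""
    for part in parts:
        if part:  # an empty part would look like the terminating chunk
            yield f"{len(part):X}\r\n".encode("ascii") + part + b"\r\n"
    yield b"0\r\n\r\n"  # zero-length chunk marks the end of the body


def decode_chunked(stream: BinaryIO) -> Iterator[bytes]:
    """Yield each chunk payload without waiting for the whole body."""
    while True:
        size_line = stream.readline()
        if not size_line:
            raise ValueError("stream ended before the terminating chunk")
        # Chunk extensions (after ';') are allowed by the spec; ignore them.
        size = int(size_line.split(b";", 1)[0].strip().decode("ascii"), 16)
        if size == 0:
            # Skip optional trailer lines up to the final blank line.
            while stream.readline() not in (b"\r\n", b"\n", b""):
                pass
            return
        payload = stream.read(size)
        if len(payload) != size:
            raise ValueError("stream ended in the middle of a chunk")
        stream.read(2)  # consume the CRLF that follows the payload
        yield payload


# Hypothetical media fragments of a segment that is still being encoded.
fragments = [b"moof+mdat #1", b"moof+mdat #2", b"moof+mdat #3"]
wire = b"".join(encode_chunked(fragments))
received = list(decode_chunked(io.BytesIO(wire)))

if __name__ == "__main__":
    for fragment in received:
        print(fragment.decode("ascii"))
```

The point is that the receiver can hand each fragment to the decoder the moment it arrives, instead of waiting for the full segment to be written and closed.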

So what’s the best of them?

There isn’t one… The client was presented with the above knowledge and options, among others. Long story short, a solution based on Nimble was chosen. They agreed to make it public but asked to remain anonymous. And here it is for you to deploy in just a few clicks.

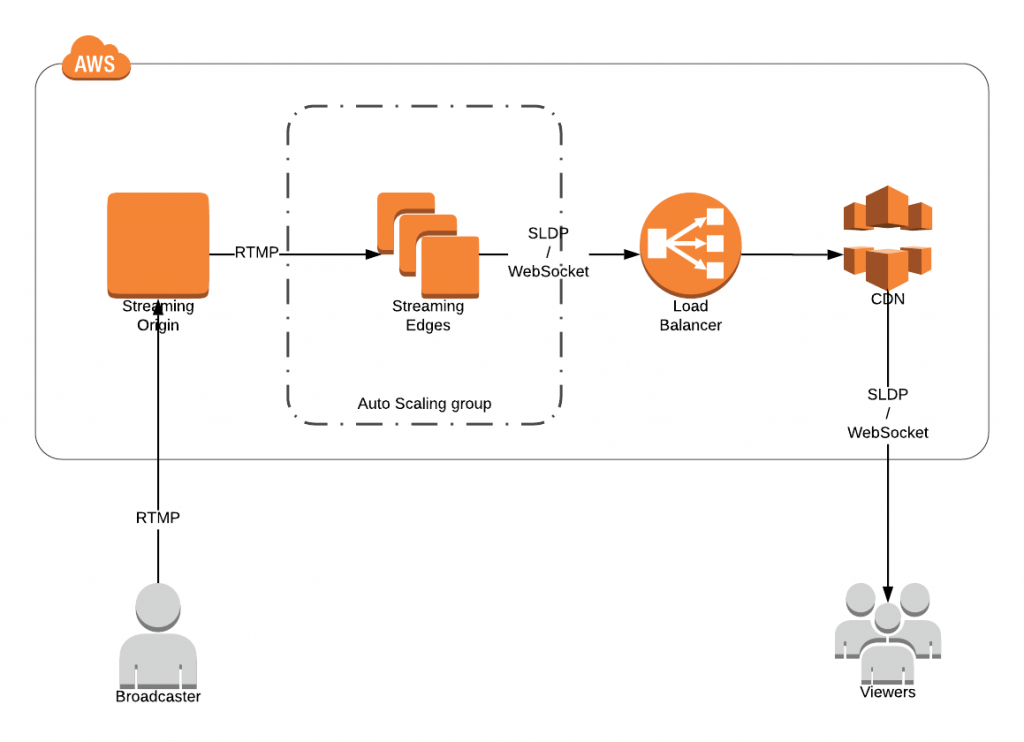

Does it scale?

Yes, viewer-wise. It has held up to hundreds of thousands and I see no reason it can’t do more. In case of sudden spikes though, the Auto Scaling group is set to only fire up a new edge every 5 minutes so you’d want to manually intervene if you expect a riot.
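To get a feel for why a sudden spike may need manual intervention, here is a back-of-the-envelope sketch. Only the 5-minute interval comes from the setup described above; the per-edge viewer capacity and the starting edge count are hypothetical numbers, and `minutes_to_absorb_spike` is an illustrative helper, not part of the deployment.

```python
import math


def minutes_to_absorb_spike(current_edges: int,
                            viewers: int,
                            viewers_per_edge: int,
                            minutes_per_new_edge: int = 5) -> int:
    """Minutes until enough edges are running, adding one edge per interval."""
    needed_edges = math.ceil(viewers / viewers_per_edge)
    missing_edges = max(0, needed_edges - current_edges)
    return missing_edges * minutes_per_new_edge


if __name__ == "__main__":
    # Hypothetical: 4 edges running, 5,000 viewers per edge, spike to 50,000.
    wait = minutes_to_absorb_spike(current_edges=4, viewers=50_000,
                                   viewers_per_edge=5_000)
    print(f"Auto scaling alone would need about {wait} minutes to catch up")
```

Under these assumed numbers the group would take half an hour to catch up, which is why pre-scaling by hand before a known event is the safer bet.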

Broadcaster-wise, no. As per the specs, it only needed to carry a handful of streams. Sure, there are ways to turn that handful into a bunch or a million.

Is it stable?

Very. It works like a charm and it’s production-ready out of the box. Don’t take my word for it, try it out.

Is it worth it?

Depends on what you’re after. At the time (summer-fall 2018), Nimble was the preferred option, with cost, reliability, and the ability to deploy on your own infrastructure taken into account. Worthy competitors were Nano, Wowza and Red5Pro.

Where’s the diagram?

Here it is, sorry…